The Inefficient Architecture

Have you ever heard the adage "premature optimization is the root of all evil"? You probably have, and you have probably also been taught that you should only optimize actual bottlenecks and leave optimization until the end.

In "Structured Programming with go to Statements", the paper that originated the phrase, Donald Knuth mentions how programmers waste enormous amounts of time worrying about the speed of noncritical parts of their programs. He also adds that while we should worry about the performance of the critical parts, our intuitive guesses about which parts are critical tend to be incorrect. Thus, we should rely on measurement tools to make these judgments.

Sometimes this leads to the extreme position that there is no point in considering the performance impact of anything until you have actual data on real-world performance. However, this may mean forgoing all optimization until it is too late.

Time to ship is a woeful optimization goal

Not too long ago, I watched an insightful talk by Konstantin Kudryashov on Min-maxing Software Costs. To me, one of the most important takeaways from that talk was that we tend to optimize the cost of introduction, that is, how fast we can write new code and ship applications, because that is the easiest thing to optimize and the easiest to measure.

Because of that, we tend to build frameworks and layers that provide convenience by hiding actual implementations and making many operations implicit or lazy. The problem is that we often end up setting traps for ourselves, because we either don't fully understand the underlying mechanics or simply forget about them when the frameworks make everything too transparent.

The N+1 problem is the first sign of things to come

A typical problem caused by the convenience provided by numerous different frameworks is the N+1 query problem. If you are unfamiliar with this particular issue, let me give an example:

Let's imagine we have users and each belongs to one organization. We want to print each user and their organization. With a typical ORM/DBAL implementation, we could have code that looks something like this:

    $users = $userRepository->findAllUsers();

    foreach ($users as $user) {
        echo $user->getName() . ', ' . $user->getOrganization()->getName();
    }

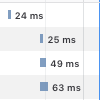

The problem with the previous example is that while findAllUsers() fetches all the users from the database using a single query, it doesn't fetch the organizations related to each user. The method call getOrganization() is actually a lazy-loading function that queries the database for the organization related to the user. What ends up happening in practice is that we make one additional query per user. So, the total number of database queries we end up making is 1 + N (where N is the number of users).

The appropriate solution would be to eagerly load the organizations for all the users. This could be done either by using a simple JOIN query or by doing a second query with the ids of the organizations. The best solution depends on the number of users per organization and your preferred framework. In this particular case, though, you may also just want to fetch the name fields specifically without fetching entire entities.
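To make the difference concrete, here is a minimal sketch of the "second query with ids" approach. It does not use any real ORM: FakeDatabase, its methods, and the sample rows are all hypothetical stand-ins that simply count how many queries would have been issued.

```php
<?php
declare(strict_types=1);

// Hypothetical in-memory stand-in for a database that counts the queries it receives.
final class FakeDatabase
{
    public int $queryCount = 0;

    /** @var array<int, array{name: string, organization_id: int}> */
    private array $users = [
        1 => ['name' => 'Alice', 'organization_id' => 10],
        2 => ['name' => 'Bob', 'organization_id' => 20],
        3 => ['name' => 'Carol', 'organization_id' => 10],
    ];

    /** @var array<int, string> */
    private array $organizations = [10 => 'Acme', 20 => 'Globex'];

    public function findAllUsers(): array
    {
        $this->queryCount++;
        return $this->users;
    }

    // Stands in for: SELECT name FROM organization WHERE id = ?
    public function findOrganizationName(int $id): string
    {
        $this->queryCount++;
        return $this->organizations[$id];
    }

    // Stands in for: SELECT id, name FROM organization WHERE id IN (...)
    public function findOrganizationNames(array $ids): array
    {
        $this->queryCount++;
        return array_intersect_key($this->organizations, array_flip($ids));
    }
}

function listUsersLazily(FakeDatabase $db): array
{
    $lines = [];
    foreach ($db->findAllUsers() as $user) {
        // One extra query per user: the N in 1 + N.
        $lines[] = $user['name'] . ', ' . $db->findOrganizationName($user['organization_id']);
    }
    return $lines;
}

function listUsersEagerly(FakeDatabase $db): array
{
    $users = $db->findAllUsers();
    // Collect the distinct organization ids and fetch them all in one second query.
    $ids = array_unique(array_column($users, 'organization_id'));
    $names = $db->findOrganizationNames($ids);

    $lines = [];
    foreach ($users as $user) {
        $lines[] = $user['name'] . ', ' . $names[$user['organization_id']];
    }
    return $lines;
}

$lazyDb = new FakeDatabase();
$lazy = listUsersLazily($lazyDb);

$eagerDb = new FakeDatabase();
$eager = listUsersEagerly($eagerDb);
```

Both functions produce the same lines, but with three users the lazy version issues four queries while the eager version issues two, and the eager count stays at two no matter how many users there are.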

Convenience is the enabler of bad performance

In my honest opinion, the above example shouldn't even be possible. The code should throw an exception because the organizations have not been initialized for the users in the first place. However, because pressure from schedules encourages us to optimize how quickly we can write code, frameworks provide functionality that automatically handles these relationships for us.

You might be inclined to think you would easily catch issues like the above, but real-world examples don't tend to be quite as straightforward. You might have code in another place that fetches database entities, and then somewhere else 20 layers deep, you have another piece of code that uses relations in an unexpected way which triggers additional queries.

The N+1 problem is merely the simplest case to demonstrate that convenience features create performance problems in applications. The core of the problem is that passing data around the different layers of an application is quite difficult. We like to hide it behind abstraction layers to make it simpler to reason about, but at the same time, we stop thinking about what is actually happening behind the scenes.

I've worked on optimizing several legacy systems that had created massive bottlenecks because of how application data stores were accessed. In one application, for example, there was a function that was the equivalent of Storage::readValue($storageName, $key), which read a single value from the store. Each call had to open and close the external store. Yet rather than caching the values, each piece of code simply called the static function separately, as that was more convenient than figuring out how the data should be passed around different parts of the application.
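The following is a rough sketch of that situation, not the actual legacy code. ExternalStore, loadSettings(), the open counter, and the sample keys are all hypothetical; only the shape of Storage::readValue($storageName, $key) comes from the application described above.

```php
<?php
declare(strict_types=1);

// Hypothetical stand-in for an external store where opening is the expensive part.
final class ExternalStore
{
    public static int $openCount = 0;

    /** @var array<string, string> */
    private array $values;

    private function __construct(array $values)
    {
        $this->values = $values;
    }

    public static function open(string $storageName): self
    {
        self::$openCount++;
        // A real implementation would open the file or connection named $storageName here.
        return new self(['locale' => 'en_US', 'timezone' => 'UTC', 'theme' => 'dark']);
    }

    public function read(string $key): ?string
    {
        return $this->values[$key] ?? null;
    }
}

// The convenient static helper: every call pays for a fresh open.
final class Storage
{
    public static function readValue(string $storageName, string $key): ?string
    {
        return ExternalStore::open($storageName)->read($key);
    }
}

// The explicit alternative: open once, read what is needed, pass plain values on.
function loadSettings(string $storageName, array $keys): array
{
    $store = ExternalStore::open($storageName);
    $settings = [];
    foreach ($keys as $key) {
        $settings[$key] = $store->read($key);
    }
    return $settings;
}

$keys = ['locale', 'timezone', 'theme'];

foreach ($keys as $key) {
    Storage::readValue('settings', $key);
}
$opensWithStaticHelper = ExternalStore::$openCount; // one open per read

ExternalStore::$openCount = 0;
$settings = loadSettings('settings', $keys);
$opensWithExplicitLoad = ExternalStore::$openCount; // one open in total
```

The explicit version is slightly more work for the caller, because the resulting array has to be handed to whatever code needs it, but the cost of opening the store is now visible at exactly one place.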

Be Explicit, Be Performant

Unfortunately, there is no silver bullet here. Software architecture is really, really hard to get right. My own philosophy, however, is to be as explicit as possible in code. When designing frameworks or APIs for libraries, we should do our best not to allow the user to shoot themselves in the foot.

In particular, if a user is doing something that is potentially costly, like accessing IO, we should force the user to be as explicit about it as possible. It should not be possible to just accidentally query a database when accessing an entity relationship. Force the user to think, even for a second, about what they're about to do. This doesn't necessarily mean that APIs should be hard to use. Rather, they should be predictable.

For example, in the previously demonstrated code, the $user->getOrganization() call should not be possible if the organization has not been preloaded for the user. Instead, we should force the user to call something akin to $userRepository->loadOrganization($user).
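A minimal sketch of what such an API could look like follows. The classes are hypothetical and deliberately tiny: the "database" is a hard-coded array, and the choice of LogicException is my own assumption about how the failure might be reported.

```php
<?php
declare(strict_types=1);

final class Organization
{
    private string $name;

    public function __construct(string $name)
    {
        $this->name = $name;
    }

    public function getName(): string
    {
        return $this->name;
    }
}

final class User
{
    private string $name;
    private int $organizationId;
    private ?Organization $organization = null;

    public function __construct(string $name, int $organizationId)
    {
        $this->name = $name;
        $this->organizationId = $organizationId;
    }

    public function getName(): string
    {
        return $this->name;
    }

    public function getOrganizationId(): int
    {
        return $this->organizationId;
    }

    public function setOrganization(Organization $organization): void
    {
        $this->organization = $organization;
    }

    // A plain getter: it never touches the database and fails loudly instead.
    public function getOrganization(): Organization
    {
        if ($this->organization === null) {
            throw new LogicException('Organization has not been loaded for user ' . $this->name);
        }
        return $this->organization;
    }
}

final class UserRepository
{
    /** @var array<int, string> hypothetical stand-in for the organization table */
    private array $organizationRows = [10 => 'Acme'];

    // The only place where the query would happen, and the caller has to ask for it.
    public function loadOrganization(User $user): void
    {
        $name = $this->organizationRows[$user->getOrganizationId()];
        $user->setOrganization(new Organization($name));
    }
}

$userRepository = new UserRepository();
$user = new User('Alice', 10);

try {
    $user->getOrganization();
    $failedLoudly = false;
} catch (LogicException $e) {
    $failedLoudly = true;
}

$userRepository->loadOrganization($user);
$line = $user->getName() . ', ' . $user->getOrganization()->getName();
```

With this shape, forgetting to load the relation shows up as an exception the first time the code runs rather than as a slow page in production, and every database access can be found by searching for repository calls.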

When you have lazy accessors to database relations, the code becomes surprisingly unpredictable. Simple getters turn into database queries, which makes it frustratingly easy to forget, especially for newer developers, that these getters can have a massive performance impact.

Revisiting the topic of premature optimization, these kinds of performance problems are usually created in the initial steps of a project because little thought is put into how performant different kinds of application structures end up being. Once you have a web application that, for example, queries the database for the same piece of data a couple hundred times in a single request, it can be really hard to fix those kinds of structural issues.

I do generally agree that optimization decisions, especially the ones about software architecture, should be based on informed opinions. There is a real danger in getting lost in meaningless micro-optimizations and focusing on the wrong things. However, if your application is inefficient by design, scaling up may become an insurmountable challenge.
